Brief intro

In this article, we will discuss creating clustered VMs on VMware’s ESX 3 Server. There are several options for doing so. You can create a cluster on a single ESX host, which is generally the easiest approach. You can also spread your nodes out across several ESX hosts. Or, if you have a test or development RAC on physical hardware and are willing to move it to a Virtual Infrastructure, you can add another node, this time on the VI3 stack. By gradually removing nodes from the physical infrastructure and adding nodes to VI3, you will migrate your RAC to VI3!

Clustering: Application Clustering vs. VirtualCenter Clustering

We won’t delve too much into clustering concepts here; you basically use cluster technology to achieve high availability and/or scalability. Nodes can be clustered by means of software and hardware clustering solutions. Oracle RAC is a typical example of a cluster-aware application. A typical clustering setup consists of a couple of nodes sharing the same disks, as with our Oracle RAC, mail servers, etc. The shared disks (LUNs: Logical Unit Numbers) must reside on a SAN using Fibre Channel (FC). Usually you have an extra network connection for cluster heartbeat traffic, much like the private interconnect that carries Cache Fusion traffic in Oracle RAC.

There is also a distinction between traditional clustering and VirtualCenter clustering. VMware’s HA (High Availability) option is a cold-standby approach to clustering nodes within VirtualCenter clusters: after a failure, the VM is restarted on another host. Traditional clustering, by contrast, uses a hot standby node.

Clustering Options in VMware ESX 3 Server

Testing an Oracle RAC installation, or even giving a demo at a client site, is fine, but if you want to try migrations, upgrades or patches, it is advisable to use ESX Server. In fact, most of my readers are ESX users, and DBAs who want to learn RAC are increasingly deploying Oracle RAC in their test, development and even staging environments. Production environments are generally not yet running RAC on ESX, but I would not be surprised if some already do. I have seen a reasonably intensive single-node Oracle database running on ESX. Moreover, the guest OS was Windows!

Coming back to the clustering options for your Oracle RAC:

1. Clustering Oracle RAC on a single ESX host

2. Clustering Oracle RAC across several ESX hosts

3. Clustering Oracle RAC across ESX and physical nodes

Clustering RAC on a single ESX host

The steps are simple here. We start by creating the first node.

1. Start your VI Client and log on to VirtualCenter or to the ESX host itself.

2. Create a new virtual machine and select “Custom”.

3. RAC resource pool: If you want your RAC to run within a specific pool that has high CPU shares, select that pool when creating your VMs.

4. Datastore: It could be on your SAN (Storage Area Network) or on DAS (Direct Attached Storage). For Linux/Unix, I usually create two disks: one of 10 GB and another of 4 GB for swap.

5. Guest OS: Choose your OS.

6. CPU: You can choose up to 4 vCPUs.

7. Memory: You can allocate up to 16 GB per VM.

8. Network: We create two NICs, one for the public network and the other for the private network.

9. Install your OS (Operating System).

10. Add the additional nodes and the shared storage.

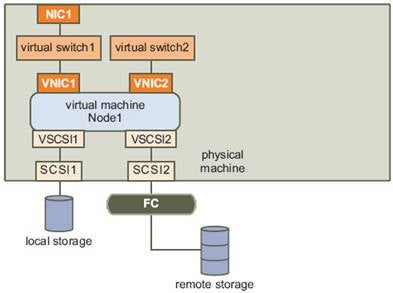

Depending on your clustering preferences, it will eventually look like this after the first node is created:

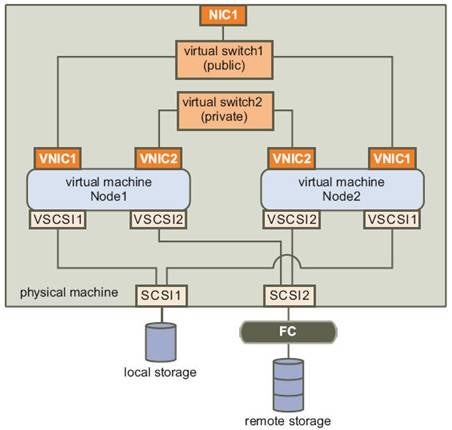

In the final picture, note that we created virtual switches on the ESX host. The private virtual switch allows for a high-speed interconnect while staying totally disconnected from the physical NICs of the ESX server. The public virtual switch connects the nodes to the application via the host’s NICs.
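For readers who prefer the service console to the VI Client, the two virtual switches could be created roughly as follows. This is only a sketch, not the procedure used for this article: the switch names (vSwitch1, vSwitch2), the port group names (RAC-Public, RAC-Private) and the uplink (vmnic1) are assumptions for illustration. The script only prints the esxcfg-vswitch commands unless DRY_RUN is set to 0.

```shell
#!/bin/sh
# Sketch: build the esxcfg-vswitch commands for a public and a private vSwitch.
# All switch, port group and uplink names are illustrative assumptions.
DRY_RUN="${DRY_RUN:-1}"

run() {
    # Print the command in dry-run mode; execute it otherwise.
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

create_rac_switches() {
    public_sw="$1"; private_sw="$2"; uplink="$3"
    # Public switch: a port group plus a physical uplink for application traffic.
    run esxcfg-vswitch -a "$public_sw"
    run esxcfg-vswitch -A "RAC-Public" "$public_sw"
    run esxcfg-vswitch -L "$uplink" "$public_sw"
    # Private switch: a port group only, no uplink, so that the
    # interconnect traffic never leaves the ESX host.
    run esxcfg-vswitch -a "$private_sw"
    run esxcfg-vswitch -A "RAC-Private" "$private_sw"
}

create_rac_switches vSwitch1 vSwitch2 vmnic1
```

Leaving the private switch without an uplink is what keeps it disconnected from the physical NICs; this only works while all RAC nodes live on the same ESX host.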

As you can see, this is not hard to achieve. By following a few simple tasks, you can have your virtual machines up and running in no time. You create and customize your first node, create the second node, add storage (including shared storage) and configure the IP addresses.

How to create shared disks on the ESX host?

This isn’t that hard either. Just execute the following steps on the ESX host:

1. Log in to the ESX host as root over a secure shell; you can use PuTTY on Windows or the plain ssh command on Linux. Create and zero out the disks using the following command:

vmkfstools -c <size> -d eagerzeroedthick /vmfs/volumes/<mydir>/<myDisk>.vmdk

Example: vmkfstools -c 10G -d eagerzeroedthick /vmfs/volumes/RACShare/asm01.vmdk

2. An existing disk can be zeroed out with: vmkfstools [-w | --writezeroes] /vmfs/volumes/<mydir>/<myDisk>.vmdk

3. Open Edit Settings for node one and choose to add an existing disk; browse to the datastore, select the disk, and place it on a new SCSI device node by choosing SCSI (1:0).

4. Upon adding your first shared disk, you will notice that a new SCSI controller is created.

5. Add all the vmdk’s you created: ocr.vmdk, votingdisk.vmdk, spfileasm.vmdk, asm01.vmdk, asm02.vmdk (for Oradata), asm03.vmdk, asm04.vmdk (for Flash Recovery Area).

6. Repeat these steps on all of the nodes.

7. Select the SCSI controller in the Edit Settings pane and check that the controller type is set to LsiLogic.

8. In the same panel, set SCSI Bus Sharing to Virtual (as illustrated).
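The disk-creation step lends itself to a small loop on the service console. The following is only a sketch under assumptions: the RACShare directory and the disk names come from the examples in this article, but the size chosen for each disk is invented for illustration. The script prints the vmkfstools commands instead of running them unless DRY_RUN is set to 0.

```shell
#!/bin/sh
# Sketch: create eagerzeroedthick shared disks for the RAC nodes.
# Disk names follow the article; the sizes are illustrative assumptions.
DRY_RUN="${DRY_RUN:-1}"
DATASTORE_DIR="${DATASTORE_DIR:-/vmfs/volumes/RACShare}"

create_shared_disk() {
    # $1 = disk name without extension, $2 = size (for example 1G or 10G)
    if [ "$DRY_RUN" = "1" ]; then
        echo "vmkfstools -c $2 -d eagerzeroedthick $DATASTORE_DIR/$1.vmdk"
    else
        vmkfstools -c "$2" -d eagerzeroedthick "$DATASTORE_DIR/$1.vmdk"
    fi
}

create_all_shared_disks() {
    # Small disks for the cluster registry, voting disk and ASM spfile (sizes assumed).
    for name in ocr votingdisk spfileasm; do
        create_shared_disk "$name" 1G
    done
    # Larger ASM disks for Oradata and the Flash Recovery Area (sizes assumed).
    for name in asm01 asm02 asm03 asm04; do
        create_shared_disk "$name" 10G
    done
}

create_all_shared_disks
```

Review the printed commands first, then rerun with DRY_RUN=0 on the ESX host once the names and sizes match your own layout.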

* : In the Solaris 10 installation for the Oracle RAC 10g R2 testbed, I encountered a funny problem. After installing the OS and configuring all of the memory limits and ssh equivalency, I moved on to cloning my fully prepared VM in VirtualCenter. Then I added shared storage and, upon restarting the OS, I lost my vmxnet0 NIC. This happened because I added another SCSI controller for the shared disks and the PCI slots shifted, making the OS assume that the NIC had been removed. So for Solaris RAC on ESX, make sure that you add all of the disks while creating the VM, and only then proceed to install the OS.

Note:

1. Strangely enough, even after setting the type to LsiLogic, you get a warning regarding the type (one per shared disk on that controller). Click Yes on all of them.

2. Use VirtualCenter to clone the fully prepared node and save its configuration, so that you can always fall back on it should things go wrong.

3. If you don’t have VirtualCenter, use VMware Converter to make a backup copy and to create online clones on the ESX machine.
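The controller type and bus sharing mode chosen in Edit Settings are stored in each node's .vmx file, so they can also be double-checked from the service console. A hedged sketch follows, assuming the shared disks sit on the second controller (scsi1, matching SCSI (1:0)) and that the file uses the usual key = "value" layout. The helper names vmx_value and check_shared_bus are hypothetical, as is the path in the usage comment.

```shell
#!/bin/sh
# Sketch: verify that scsi1 is an LsiLogic controller with virtual bus sharing.
# Assumes the shared disks were attached to the second controller (scsi1).

vmx_value() {
    # Print the value of key $2 from .vmx file $1 (lines look like: key = "value").
    awk -F'"' -v key="$2" '{
        k = $1
        gsub(/[ \t=]/, "", k)          # strip spaces, tabs and "=" around the key
        if (k == key) { print $2; exit }
    }' "$1"
}

check_shared_bus() {
    vmx="$1"
    dev="$(vmx_value "$vmx" scsi1.virtualDev | tr 'A-Z' 'a-z')"
    bus="$(vmx_value "$vmx" scsi1.sharedBus | tr 'A-Z' 'a-z')"
    if [ "$dev" = "lsilogic" ] && [ "$bus" = "virtual" ]; then
        echo "OK: $vmx"
    else
        echo "CHECK: $vmx (virtualDev=$dev, sharedBus=$bus)"
        return 1
    fi
}

# Usage (hypothetical path):
#   check_shared_bus /vmfs/volumes/<datastore>/<vm>/<vm>.vmx
```

Running the check against every node's .vmx file is a quick way to catch a node where the bus sharing setting was forgotten.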

Conclusion

In the next installment, we will explore shared storage considerations across ESX hosts, as well as Oracle RAC clustering between a VM on ESX and an Oracle node on a physical host.